Hangout for experimental confirmation and demonstration of software, computing, and networking. The exercises don't always work out. The professor is a bumbler and the laboratory assistant is a skanky dufus.


2010-05-01 Republishing before Silence

The nfoCentrale blogs, including Professor von Clueless, were published through Blogger via FTP transfer to my web sites. That service is ending. As part of the migration, I am republishing this blog in the latest stable template format. Then there will be silence as Blogger is unhooked, although the pages will remain. No new posts or comments will work until I update the web site to use its own blog engine. Once that migration is completed, posting will resume here, with details about the transition and any breakage that remains to be repaired. Meanwhile, if you are curious to watch how this works out, check on Spanner Wingnut’s Muddleware Lab. It may be in various stages of disrepair, but that blog will come under new custodianship first.

Labels: web site construction

2009-05-12 Command Line Utilities: What Would Purr Do?

On the Linux Journal feed, Shawn Powers has a 2005-09-11 video, “Commandline 101: cat, Not Just for Purring.” Of course, the Unix utility command “cat” is short for concatenate, and knowing that, I never think of small furry animals when I see the term in the context of command-line operations. Silly me. Powers’ video is a nice screencast that demonstrates the versatility of this little utility, especially in conjunction with redirection (and also piping, but that probably comes in a later lesson).

Without even watching the tutorial, I was suddenly confronted with the unasked question: What would a utility named purr be good for? My first thought was creating pleasant audio of purring felines. Then there could be command-line options to control the kind of purr, and even the kind of feline. Do lions purr? That’s interesting, and perhaps fun, but a little too easy. A different challenge would be to come up with a legitimate utility function for which purr would become a completely reasonable and recognizable name. I have no idea.
To confess my advanced state of cluelessness, I also have no immediate ideas about functions named fur, fly, whine, growl, snarl, snore, whimper, etc, ditto, etc.

Labels: console scripting, cybersmith

2008-12-31 Retiring InfoNuovo.com

[cross-posted 2008-12-29T16:42Z from Orcmid’s Lair. Some of the oldest links that still use the infonuovo.com domain are related to ODMA. This post is here to catch those who might end up searching for previously-found ODMA material and wonder where it has gone.]

I am retiring the InfoNuovo.com domain after 10 years. The domain will be cast loose at the beginning of February 2009. Places that still have references to infonuovo.com need to be updated:

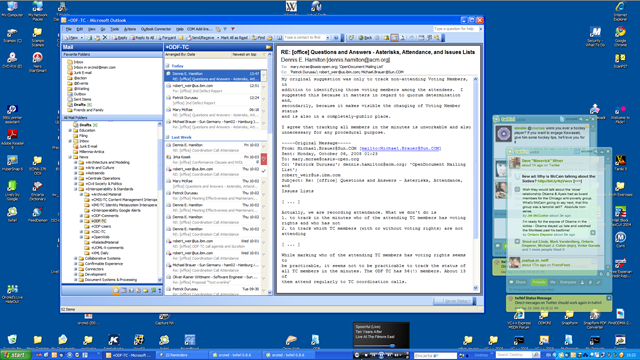

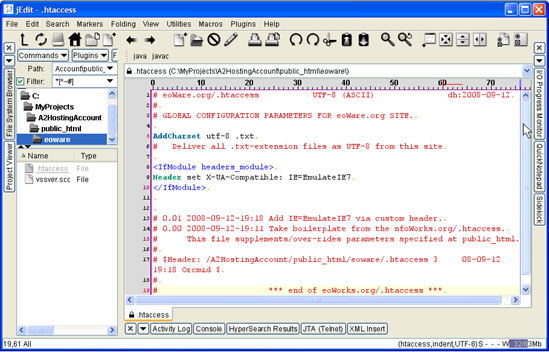

If you have an infonuovo.com bookmark and you are not sure of its replacement, simply use it and notice the URL of the destination that appears in the address bar of your browser. That is the URL that should be bookmarked.

InfoNuovo.com was the first domain name that I ever rented. It was originally hosted on VServers, and that hosting was absorbed through acquisitions a couple of times. On March 22, 1999, I posted my first construction note on the use of InfoNuovo.com as an anchor site, a web site that houses other web sites as part of a single hosting. This was also the first step toward evolution of what I now call the construction structure of any nfoCentrale web site.

InfoNuovo was the company name I had chosen for my independent consulting practice, begun on retirement from Xerox Corporation in December 1998. When I moved from Silicon Valley to the Seattle area in August 1999, I found that InfoNuovo was too easily confused with a name already registered in Washington State. The business became NuovoDoc, but I continued to hold the infonuovo.com domain name for the support of the subwebs housed there. I eventually moved most content to the new anchor, nfoCentrale.net, on Microsoft bCentral.

There was one problem. Although I could redirect unique domain names, such as ODMA.info, to the current anchor, the web pages were still served with the URLs of their actual location on the anchor site. I experimented with URL cloaking, but that created as many problems as it solved. In October 2006, following the lead of Ed Bott, I switched to A2 Hosting to reduce hosting fees and to take advantage of the A2 shared-hosting Apache-server provisions for addon domains. Addon domains are served with the URLs of their own domain even though their content is anchored on a single hosted site (in this case, nfoCentrale.com). I consolidated all nfoCentrale.net and infonuovo.com content on nfoCentrale.com.
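How an addon domain can be served from inside one hosted account can be sketched as a lookup keyed on the request's Host header. This is a minimal illustration, assuming (as is typical of cPanel-style shared hosting) that each addon domain's document root is a folder under the account's public_html; the account path and the odma.info-to-odma mapping are hypothetical. The host name is lowercased because domain names are case-insensitive, but the URL path is passed through unchanged, because file names on a Linux-hosted Apache server are case-sensitive.

```python
from pathlib import PurePosixPath

# Hypothetical layout: the anchor site's document root and the folders
# that hold each addon domain's pages. Names are illustrative only.
ANCHOR_ROOT = PurePosixPath("/home/account/public_html")
ADDON_DOMAINS = {
    "odma.info": "odma",
    "www.odma.info": "odma",
}

def resolve(host: str, url_path: str) -> PurePosixPath:
    """Map an incoming Host header and URL path to a file on the anchor site."""
    host = host.lower().split(":", 1)[0]   # domain names ignore case; drop any port
    subfolder = ADDON_DOMAINS.get(host)    # None means the anchor domain itself
    base = ANCHOR_ROOT / subfolder if subfolder else ANCHOR_ROOT
    # The path keeps its case: "Docs" and "docs" are different folders on
    # the server. (No ".." sanitizing here; this is an illustration only.)
    relative = url_path.lstrip("/") or "index.html"
    return base / relative

print(resolve("ODMA.info", "/Docs/a.htm"))
print(resolve("nfocentrale.com:80", "/"))
```

The browser keeps showing the addon domain because nothing in this mapping sends it elsewhere; only the server's choice of folder changes.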
I also parked the domains nfoCentrale.net and infonuovo.com where they are today, atop nfoCentrale.com. Now, however, accessing any of the individual subwebs triggers redirection to the appropriate addon-domain URL. This took care of my wanting to have the subwebs always respond as the domains that I have as their addons. It also raised an unexpected problem around case-sensitivity of Apache filenames, a situation I am still digging my way out of. That shows how important having the addon-domain capability is to me. I’m not sure I’d have moved if I had known how difficult the case-sensitivity extrication would be, though.

I know that there are still infonuovo.com URLs out there, even though the addon domains have been in place for over two years. In another month, those URLs will fail. I just don’t want to lease infonuovo.com any longer. I do feel a little sentimental about it. That’s not going to stop me.

Labels: DMware, ODMA, web site construction

2008-10-07 Confirmable Experience: What a Wideness Gains

Technorati Tags: confirmable experience, successful communication, dependable systems, usability, cybersmith

[Here’s another confirmable-experience cross-posting. There’s some food for thought here about having displays that support your own productivity and enjoyment of sessions at the computer. There’s also something to be cautious about when assuming things about the ways users experience the interfaces that you implement. I know that I often design interfaces for myself, and that may fall far short of what is workable for someone who doesn’t approach their work in the same way and who doesn’t have the same computer setup.]

Four years ago, I replaced a failing 21” CRT display with a 20” LCD monitor. The improvement was amazing. I have since upgraded my Media Center PC with a graphics card that provides DVI output, and there was more improvement.
But the greatest improvement came when the 20” LCD monitor recently began to have morning sickness, flickering on and off for longer and longer times before providing a steady display. Before it failed completely, I began shopping for the best upgrade on the competitive part of the LCD monitor bang-for-buck curve. These days, 24” widescreen LCD monitors are the bee’s knees. For almost half what I paid for the 20” LCD in 2004, I obtained a 1920 by 1080 DVI LCD (Dell S2409W) that is not quite the same 11.75” height but is 21” wide. The visual difference is dramatic when viewing 16:9 format video and also when viewing my now-favorite screensaver. I added a shortcut to my Quick Launch toolbar just to be able to watch the screensaver and listen to the bubbles while making notes at my desk.

One of the problems I had with the 20” old-profile (6:4, basically) display was that I could not work with multiple documents open at the same time. I don’t mind only having one fully on top, but I often needed to be able to switch between them easily. In some standards-development work that requires comparison of passages in different documents, it was also tricky to have them open in a way where I could line up the material to be compared and checked. The wider display permits having more of an application open, such as Outlook, and it also allows access to additional open material.

What I hadn’t expected from the wider display was the tremendous improvement that becomes available when there is a 21” task bar at the bottom of the screen. That alone has made my working at the computer more enjoyable and more fluid. My desktop is still too cluttered with icons and I am still tidying them up, removing ones that I rarely use. Even so, the perimeter of the display provides room for more icons outside the central work area, so I can find them without having to close or move application windows. That’s another bonus.
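The width and height figures follow from the diagonal and the aspect ratio by similar triangles. A small sketch for checking them, assuming nominal diagonals (actual viewable areas are usually a little smaller) and assuming a 4:3 shape for the older 20” panel:

```python
import math

def panel_size(diagonal: float, aspect_w: int, aspect_h: int) -> tuple[float, float]:
    """Return (width, height) in the diagonal's units for a given aspect ratio."""
    hyp = math.hypot(aspect_w, aspect_h)  # diagonal of the aspect-ratio triangle
    return diagonal * aspect_w / hyp, diagonal * aspect_h / hyp

for label, diag, aw, ah in [("20-inch 4:3", 20, 4, 3), ("24-inch 16:9", 24, 16, 9)]:
    w, h = panel_size(diag, aw, ah)
    print(f"{label}: {w:.2f} in wide, {h:.2f} in high")
# 20-inch 4:3: 16.00 in wide, 12.00 in high
# 24-inch 16:9: 20.92 in wide, 11.77 in high
```

A nominal 24” 16:9 panel comes out just under 21” wide and a bit under 12” high, with a width about 5” greater than a 20” 4:3 panel of similar height.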
I must confess that I haven’t had so much fun since I progressed from Hercules-graphics amber monitors to full-color displays in the early 90s. It is sometimes difficult to realize that it wasn’t that long ago.

Oh Yes, the Confirmable Experience …

There are two confirmable-experience lessons here. First, the subjective experience I am having is mine. The wide-format monitor is an affordance for my heightened excitement and enjoyment, but the experience is mine. Others have different reactions and, in particular, have their own ideas about display real-estate, task bars, and other user-interface provisions.

For the second lesson, recall how much emphasis I give to using a screen-capture utility for computer forensic and trouble-reporting work. That will often provide important out-of-band evidence for a problem that one user is seeing and that another party does not. These screen captures provide similar evidence of what the wider-format display provides for me. They don’t provide any assurance that you will see them the same way I do, however. If you click through to the full-size images, you’ll see a rendition of the same bits that my display shows me. I assure you that the image I see when replaying those bits to my screen is exactly the same as the one I took a screen capture of.

There are a number of ways that your experience will be different. At the most fundamental level, there is no way, using these images only, to determine whether the color presented for a particular pixel on your display is the same as the one I see on mine. The PNG files do not reflect what I saw. They do faithfully reflect what my software and graphics card used in the internal image that was presented via my display. But we have no idea whether your computer is presenting the same color using the same bits.
There are other differences, of course, in that gross features may not be viewable in the same way my monitor allows me to see them (unless yours has at least the 1920 by 1080 resolution that mine does). All of this interferes with our sharing this particular experience of mine, even before allowing for differences in our vision and subjective influences. The takeaway for this part is that context matters with regard to what qualifies as a confirmable and confirmed experience. It’s also useful to notice how many different aspects of the computer-bits-to-displayed-pixels pipeline can influence whether or not I have successfully shared relevant aspects of my experience with you. And we do manage to make it all work, most of the time, for most of us.

Labels: confirmable experience, cybersmith, trustworthiness

2008-10-05 Confirmable Experience: Consider the Real World

Technorati Tags: Clarke Ching, confirmable experience, successful communication, dependable systems, trustworthiness, cycle of learning and improvement, usability

[cross-posted from Orcmid’s Lair, essentially for the reasons stated here.]

Clarke Ching just posted a great illustration of a confirmable-experience situation. Until a set of comparative photographs was available to illustrate some different experiences, he and his wife did not know how to understand a difficulty that one had and the other did not (and check the follow-up for more important reality). This is the entire crux of it. I often go on about the importance of confirmable experience in the area of trustworthy and dependable systems. Providing confirmable experience is something software producers (and motivated power users) need to pay attention to. Clarke provides the Cool Hand Luke reality version. Sometimes communication is not simple and it is important to remove the barriers.

I posted this on Orcmid’s Lair and also wanted to drag it into my confirmable-experience cybersmith collection.
I want it there because it is so juicy, even though this is not my main confirmable-experience category location. Well, I think not. I will resolve it for now with cross-posting. Sometimes, I need to make a mess to know that it is not the way to do it. Now I have to dig my way out of it.

Labels: confirmable experience, cybersmith, interoperability, trustworthiness

2008-09-12 Cybersmith: IE 8.0 Mitigation #1: Site-wide Compatibility

Technorati Tags: cybersmith, interoperability, IE8.0 mitigation, web site construction, web standards, compatibility

I have been experimenting with Internet Explorer 8.0 beta 2 enough to realize that all of my own web sites are best viewed in compatibility mode, not standards mode. I find it interesting that other browsers, such as Google Chrome, apparently apply that approach automatically, suggesting to me that the IE 8.0 standards mode is going to cause tremors across the web.

The first step to obtaining immediate, successful viewing under IE 8.0, as well as older and different browsers, is to simply mark all of my sites as requiring compatibility mode. That is the least activity that can possibly work. It provides tremendous breathing room for being more selective, followed eventually by substitution of fully-standard versions of new and heavily-visited web pages on my sites. Some pages may remain perpetually under compatibility mode, especially since the convergence of web browsers around HTML 5 support will apparently preserve accommodations for legacy pages designed against non-standard browser behaviors.

This post narrates my effort to accomplish site-wide selection of compatibility mode by making simple changes to web-server parameters, not touching any of the web pages at all.

#0. The Story So Far

On installing Internet Explorer 8.0 beta 2, I confirmed that none of my web sites render properly using the default standards-mode rendering. However, my sites render as designed for the past nine years if I view them in compatibility mode. Although I want to move my high-usage pages to standards mode over time, I don't want users of Internet Explorer 8.0 to have to manually select compatibility mode when visiting my sites and their blog pages. What I want now is the simplest step that will advertise to browsers that my pages are all to be viewed in compatibility mode. This will direct the same presentation in IE 8.0 as provided by older versions of Internet Explorer and current browsers (such as Google Chrome) that don't have the IE 8.0 standards mode. I can then look at a gradual migration toward having new and high-activity pages designed for standards-mode viewing, while other pages may continue to require compatibility mode indefinitely.

#1. The Simplest First Step That Can Possibly Work

There are ways to have web sites define the required document compatibility without having to touch the existing web pages at all. If I am able to accomplish that, I will have achieved an easy first step:

This is accomplished by convincing the web server for my sites to insert the following custom-header line in the headers of every HTTP response that the server makes:

The HTTP-response header lines precede the web page that the web server returns. The browser treats all lines before the first empty line as headers; everything following that empty line is the source for the web page. The browser processes the headers it is designed to recognize and ignores any others. You can see the headers returned in response to an HTTP request by using utilities such as cURL and WFetch. Here are the headers from my primary web site using a show-headers-only request via the command-line tool, cURL:

2. Satisfying the Prerequisites

The MSDN article on Defining Document Compatibility describes site-wide compatibility control for two web servers: Microsoft Internet Information Server (IIS) and Apache HTTP Server 1.3, 2.0, and 2.2.
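The header/body split described in section #1 can be shown with a short parser. This is a minimal sketch, not how any particular browser is implemented. It assumes an HTTP/1.x response with CRLF line endings, and the sample header value IE=EmulateIE7 is an assumption: it is one of the values the MSDN Defining Document Compatibility article lists for IE7-style rendering, and the exact line used on these sites is not reproduced here.

```python
def split_response(raw: bytes) -> tuple[str, dict[str, str], bytes]:
    """Split a raw HTTP/1.x response into status line, headers, and body.

    Everything before the first empty line is the head; everything after it
    is the page source. Header names are case-insensitive, so they are
    stored lowercased. (Repeated headers simply overwrite one another here.)
    """
    head, _, body = raw.partition(b"\r\n\r\n")
    lines = head.decode("iso-8859-1").split("\r\n")
    status, headers = lines[0], {}
    for line in lines[1:]:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return status, headers, body

raw = (
    b"HTTP/1.1 200 OK\r\n"
    b"Content-Type: text/html\r\n"
    b"X-UA-Compatible: IE=EmulateIE7\r\n"
    b"\r\n"
    b"<html><body>page source</body></html>"
)
status, headers, body = split_response(raw)
print(status)                          # HTTP/1.1 200 OK
print(headers.get("x-ua-compatible"))  # IE=EmulateIE7
print(body[:6])                        # b'<html>'
```

A client that knows nothing about X-UA-Compatible looks up only the names it understands, which is why adding the header is harmless to other browsers.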

The Apache 2.2 Module mod_headers documentation and Hanu Kommalapati's example describe how response headers can be specified at the directory level, such as in an .htaccess file, instead of in server-wide configuration.
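A directory-level setting of this kind is a small block of mod_headers directives. The sketch below produces that block and adds it to existing .htaccess text without disturbing other directives, so it can be used both for a new file and for one that already exists. Assumptions: the header value IE=EmulateIE7 (one of the values in the MSDN article), and a server whose AllowOverride setting permits FileInfo directives, which the Header directive needs in .htaccess. The function name is hypothetical.

```python
DIRECTIVE_BLOCK = (
    "<IfModule mod_headers.c>\n"
    '  Header set X-UA-Compatible "IE=EmulateIE7"\n'
    "</IfModule>\n"
)

def add_compat_header(htaccess: str) -> str:
    """Return .htaccess text that includes the compatibility-header block.

    Existing directives are kept; the block is appended only if no
    X-UA-Compatible setting is present, so running this again over an
    already-updated file changes nothing.
    """
    if "X-UA-Compatible" in htaccess:
        return htaccess
    if htaccess and not htaccess.endswith("\n"):
        htaccess += "\n"
    return htaccess + DIRECTIVE_BLOCK

print(add_compat_header("Options -Indexes"))
```

The `<IfModule>` wrapper keeps the server from failing with a configuration error if mod_headers is not loaded, at the price of silently sending no header in that case, so the result still needs to be confirmed from the client side.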

3. Experimental Approach for Confirming mod_headers Operation
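One way to confirm that mod_headers is doing its job is to request only the headers for a test URL and look for the custom one. A minimal Python sketch, assuming the header name X-UA-Compatible; the function name is illustrative, and the URL to check is whichever out-of-the-way page is being used for the trial.

```python
import urllib.request
from typing import Optional

def compat_header(url: str, timeout: float = 10.0) -> Optional[str]:
    """Return the X-UA-Compatible response header for url, or None if absent.

    Uses a HEAD request, so only the status line and headers come back,
    much like a show-headers-only request from a command-line client.
    A server error (for example, a 500 caused by a bad .htaccess) raises
    urllib.error.HTTPError rather than returning None.
    """
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # response.headers is an http.client.HTTPMessage; lookups ignore case.
        return response.headers.get("X-UA-Compatible")
```

Before the change, the function should return None for the test page; after it, the configured value. `curl -I` against the same URL shows the same headers.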

4. Web Deployment Approach

Having direct FTP access to the web-page folders on my server, along with an out-of-the-way place to try out the change, is relatively safe. Since I am placing an .htaccess file where there presently is none, it is feasible to (1) upload the file, (2) see if it works, and (3) quickly delete it if there is any failure. Having succeeded in introducing .htaccess files for other purposes, I'm confident I can make the change correctly.

I won't do it that way. Instead, I will rely on my web-deployment-safety model and take advantage of the safety net it affords, even though I could do without it if all I wanted was experimental confirmation. This is a cybersmith post, and I want to illustrate a disciplined approach that has more flexibility in the long run. To see the result that could have been attained by using the direct approach, you can peek ahead to section 6, below, and the image just above it.

Here is the structure of safeguards that I employ to control updates to my sites, keep them backed up, and also have a way to restore or move some or all of the sites. I can also roll back changes that are incorrect or damaging. I can repair a corrupted site too (and I have had to do that in the past).
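The direct approach in steps (1) to (3) amounts to "try it, check it, back it out." A minimal sketch of that pattern, with the upload, check, and removal passed in as functions so the logic can be exercised without a server; the function name is hypothetical, and this is not the fuller deployment model described above.

```python
from typing import Callable

def trial_deploy(upload: Callable[[], None],
                 verify: Callable[[], bool],
                 remove: Callable[[], None]) -> bool:
    """Upload a change, check it, and back it out if the check fails.

    Returns True if the change is kept. An exception during verification
    is treated as a failure, so a change that breaks the site is removed.
    """
    upload()
    try:
        ok = verify()
    except Exception:
        ok = False
    if not ok:
        remove()
    return ok
```

In practice, `upload` and `remove` might wrap `ftplib.FTP.storbinary` and `ftplib.FTP.delete`, and `verify` might fetch the trial page and inspect its response headers.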

5. Authoring the .htaccess File

If I were simply making an .htaccess page, I would create it directly in a text editor, producing a file such as the one created in step 4, below. That page would be saved at a convenient local-machine location and then transferred to the hosted site using FTP, leading to the result in section 6. To preserve my development and deployment model, I require more steps.

6. Deploying the .htaccess File

With the .htaccess file edited on my development machine and checked into VSS (on the development server), the next steps are all conducted on the development server:

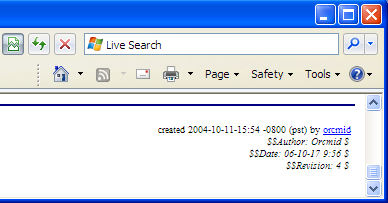

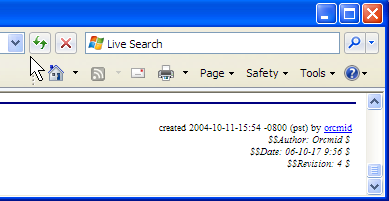

This is the same deployment procedure I use for updating any of my individual sites under the hosted site. A script for it would be useful. This is on my someday-not-now list. Scripted or not, this is the basic procedure.

7. Confirming .htaccess Success

Assuming that the .htaccess introduction has not derailed the server, confirmation of the parameters and their success is straightforward:

8. Shampoo, Rinse, Repeat

We've demonstrated that the .htaccess customization works correctly on my web site and provides the desired result on a little-used URL that is a placeholder for work yet to come. After this cautious effort, it will be straightforward to add similar .htaccess files to each of the individual sites implemented on the hosted site. Before that, I will first add the custom-header response to the .htaccess file that is already at public_html, the root of the main site, http://nfocentrale.com. This provides the custom header for all access.

Once all site access returns the custom HTTP header, I can take my time determining how to migrate sections of web sites to pages that view properly in IE 8.0 standards mode. That work will be accounted for as additional mitigation steps.

9. Tools and Resources

The following tools were used in this mitigation step:

Labels: cybersmith, IE8.0 mitigation, web site construction

2008-09-10 DMware: OK, What's CMIS Exactly?

Technorati Tags: DMware, IBM, Microsoft, CMIS, Content Management, iECM, EMC, Lotus, Sharepoint, Filenet, Open Text, OASIS, Web Services, interoperability, Document Management

There's a nice flurry of interoperability news today, announcing the Content Management Interoperability Services (CMIS) Specification sponsored by EMC, IBM, and Microsoft, with the participation of other content-management vendors, including Open Text. [update: There is extensive coverage on Cover Pages. I recommend that as the comprehensive source.]

Content/Document-Management Integration/Middleware Scheme for This Century?

The stratospheric view from Josh Brodkin suggests that CMIS is a means for cross-over between different content-management regimes as well as bridging from content-aware applications to content-management systems. The Sharepoint Team describes CMIS as an adapter and integration model for access from content-aware applications in a CMS-neutral way, relying on distributed services via SOAP, REST, and Atom protocols. The 0.5 draft specification (2008-08-28, 6.64 MB Zip file download) provides a core data model for expressing managed repository entities, with a loosely-coupled interface for application access to repositories via that model:

"The CMIS interface is designed to be layered on top of existing Content Management systems and their existing programmatic interfaces. It is not intended to prescribe how specific features should be implemented within those CM systems, nor to exhaustively expose all of the CM system’s capabilities through the CMIS interfaces. Rather, it is intended to define a generic/universal set of capabilities provided by a CM system and a set of services for working with those capabilities."
It appears that a wide variety of service integrations are possible, although the basic diagram has the familiar shape of an adapter-supported integration on the model of ODBC (and TWAIN and ODMA). Although that's the model, the integration approach is decidedly this-century, relying on relatively straightforward HTTP-carried protocols rather than client-side integration. Clients must rely on the Service-Oriented Interface, and there is room for client-side adapters to encapsulate that. Either way, this strikes me as timely and very welcome.
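The adapter shape shared with ODBC and ODMA can be made concrete with a small in-process analogy: applications code against a neutral interface and data model, and each adapter translates one system's native records into that model. The class and method names below are hypothetical and are not CMIS operations; the actual specification carries such calls over SOAP, REST, and Atom rather than local method calls.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field

@dataclass
class ContentObject:
    """Hypothetical neutral model of a repository entry."""
    object_id: str
    name: str
    properties: dict = field(default_factory=dict)

class RepositoryAdapter(ABC):
    """Generic capabilities an application codes against (hypothetical names)."""

    @abstractmethod
    def get_object(self, object_id: str) -> ContentObject: ...

    @abstractmethod
    def get_children(self, folder_id: str) -> list[ContentObject]: ...

class InMemoryRepository(RepositoryAdapter):
    """Stand-in for one vendor's system, translated into the neutral model."""

    def __init__(self, native: dict[str, dict]):
        self._native = native  # vendor-specific records keyed by id

    def _convert(self, oid: str) -> ContentObject:
        rec = self._native[oid]
        return ContentObject(oid, rec["title"], {"parent": rec.get("parent")})

    def get_object(self, object_id: str) -> ContentObject:
        return self._convert(object_id)

    def get_children(self, folder_id: str) -> list[ContentObject]:
        return [self._convert(oid) for oid, rec in self._native.items()
                if rec.get("parent") == folder_id]

def list_folder(repo: RepositoryAdapter, folder_id: str) -> list[str]:
    """Application code: works against any adapter, not any one CMS."""
    return [obj.name for obj in repo.get_children(folder_id)]

repo = InMemoryRepository({
    "root": {"title": "Root"},
    "d1": {"title": "Spec.doc", "parent": "root"},
})
print(list_folder(repo, "root"))  # ['Spec.doc']
```

Swapping in a different adapter changes nothing in `list_folder`, which is the point of layering a generic set of capabilities over existing systems.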

Next Steps

The authors have been working for two years to arrive at the draft that will now be submitted to OASIS, estimating that it will take another year to finalize a 1.0 version. [Such a Committee Draft would then go through some rounds of review before promulgation as an OASIS Standard.] I thought, at first, that this was some form of offshoot from the AIIM Interoperable ECM (iECM) Standards Project, yet there is no hint of that in the CMIS materials or on the iECM project and wiki pages.

Announcement of the Proposed TC has just appeared at OASIS [afternoon, September 10]. OASIS members will make any comments on the proposed charter by September 24, after which there will be a call for participation and then an initial meeting. OASIS members who want to participate in the TC can sign up after the call for participation. The initial meeting is provisionally targeted for a November 10 teleconference. The first face-to-face meeting is planned for three days in mid-January in Redmond. You can follow the charter-discuss list here to see whether there are any questions about the charter, scope, and overlaps with other efforts.

Announcements, Commentary, and Resources

[update 2008-09-12T08:09Z I am having trouble getting Blogger to push updates through FTP to my site. This repost is an attempt to get the previous changes posted.

update 2008-09-11T19:12Z Added some interesting links as deeper analysis and pontification arise. I don't expect to add more unless Dare or Tim Bray chime in.

update 2008-09-11T15:43Z Use full-size CMIS diagram from EMC (via Cover Pages).

update 2008-09-11T15:35Z Repair handling of images and draw attention to the Service-Oriented Interface notion employed in the CMIS diagram.

update 2008-09-11T15:17Z Add link to comprehensive Cover Pages compilation. I'm also praying that Blogger's FTP update succeeds sometime close to when I submit the post.

update 2008-09-11T02:37Z Add links to additional resources at EMC and add images/videos to the page.

update 2008-09-11T01:15Z Added more links and information about the OASIS Proposed Charter for the CMIS TC.]

Labels: DMware, interoperability

template created 2004-06-17-20:01 -0700 (pdt) by orcmid

![CMIS Integration Model featuring Service-Oriented Interface [via EMC]](http://orcmid.com/BlunderDome/clueless/images/DMwareOKWhatsCMISExactly_D3E4/F08xx14200809110839CMISEMC_thumb.jpg)